Welcome to visit.

This site collected the notes I made during work over the years. I created it for my own reference initially, then think it could be a good idea to share with everyone.

Hope you find it useful at some point.

Database

- How to list all tables and describe tables in oracle

- How to start, stop and restart oracle listener

- Finding which table contains a column in the oracle

- How to check oracle host name

- Install oracle for ruby-oci8 on ubuntu

How to List All Tables and Describe Tables in Oracle

Connect to the database:

sqlplus username/password@database-name

To list all tables owned by the current user, type:

select tablespace_name, table_name from user_tables;

To list all tables in a database:

select tablespace_name, table_name from dba_tables;

To list all tables accessible to the current user, type:

select tablespace_name, table_name from all_tables;

How To Start, Stop and Restart Oracle Listener

Starting up and shutting down the oracle listener is a routine task for a database administrator. However a Linux system administrator or programmer may end-up doing some basic DBA operations on development database. It is critical for non-DBAs to understand the basic database admin activities.

In this article, let us review how to start, stop, check status of an oracle listener using Oracle listener control utility LSNRCTL.

Also refer to our earlier article about how to start and stop the Oracle database

How To Start, Stop and Restart Oracle Listener

1. Display Oracle Listener Status

Before starting, stopping or restarting make sure to execute lsnrctl status command to check the oracle listener status as shown below. Apart from letting us know whether the listener is up or down, you can also find the following valuable information from the lsnrctl status command output.

- Listner Start Date and Time.

- Uptime of listner – How long the listener has been up and running.

- Listener Parameter File – Location of the listener.ora file. Typically located under $ORACLE_HOME/network/admin

- Listener Log File – Location of the listener log file. i.e log.xml

If the Oracle listener is not running, you’ll get the following message.

$ **lsnrctl status**

LSNRCTL for Linux: Version 11.1.0.6.0 - Production on 04-APR-2009 16:27:39

Copyright (c) 1991, 2007, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=192.168.1.2)(PORT=1521)))

TNS-12541: TNS:no listener

TNS-12560: TNS:protocol adapter error

TNS-00511: No listener

Linux Error: 111: Connection refused

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=EXTPROC)))

TNS-12541: TNS:no listener

TNS-12560: TNS:protocol adapter error

TNS-00511: No listener

Linux Error: 2: No such file or directory

If the Oracle listener is running, you’ll get the following message.

$ **lsnrctl status**

LSNRCTL for Linux: Version 11.1.0.6.0 - Production on 04-APR-2009 16:27:02

Copyright (c) 1991, 2007, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=192.168.1.2)(PORT=1521)))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 11.1.0.6.0 - Production

Start Date 29-APR-2009 18:43:13

Uptime 6 days 21 hr. 43 min. 49 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/oracle/product/11.1.0/network/admin/listener.ora

Listener Log File /u01/app/oracle/diag/tnslsnr/devdb/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.2)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC)))

Services Summary...

Service "devdb" has 1 instance(s).

Instance "devdb", status UNKNOWN, has 1 handler(s) for this service...

Service "devdb.thegeekstuff.com" has 1 instance(s).

Instance "devdb", status READY, has 1 handler(s) for this service...

Service "devdbXDB.thegeekstuff.com" has 1 instance(s).

Instance "devdb", status READY, has 1 handler(s) for this service...

Service "devdb_XPT.thegeekstuff.com" has 1 instance(s).

Instance "devdb", status READY, has 1 handler(s) for this service...

The command completed successfully

2. Start Oracle Listener

If the Oracle listener is not running, start the listener as shown below. This will start all the listeners. If you want to start a specific listener, specify the listener name next to start. i.e lsnrctl start [listener-name]

$ **lsnrctl start**

LSNRCTL for Linux: Version 11.1.0.6.0 - Production on 04-APR-2009 16:27:42

Copyright (c) 1991, 2007, Oracle. All rights reserved.

Starting /u01/app/oracle/product/11.1.0/bin/tnslsnr: please wait...

TNSLSNR for Linux: Version 11.1.0.6.0 - Production

System parameter file is /u01/app/oracle/product/11.1.0/network/admin/listener.ora

Log messages written to /u01/app/oracle/diag/tnslsnr/devdb/listener/alert/log.xml

Listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.2)(PORT=1521)))

Listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC)))

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=192.168.1.2)(PORT=1521)))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 11.1.0.6.0 - Production

Start Date 04-APR-2009 16:27:42

Uptime 0 days 0 hr. 0 min. 0 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/oracle/product/11.1.0/network/admin/listener.ora

Listener Log File /u01/app/oracle/diag/tnslsnr/devdb/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.2)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC)))

Services Summary...

Service "devdb" has 1 instance(s).

Instance "devdb", status UNKNOWN, has 1 handler(s) for this service...

The command completed successfully

3. Stop Oracle Listener

If the Oracle listener is running, stop the listener as shown below. This will stop all the listeners. If you want to stop a specific listener, specify the listener name next to stop. i.e lsnrctl stop [listener-name]

$ **lsnrctl stop**

LSNRCTL for Linux: Version 11.1.0.6.0 - Production on 04-APR-2009 16:27:37

Copyright (c) 1991, 2007, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=192.168.1.2)(PORT=1521)))

The command completed successfully

4. Restart Oracle Listener

To restart the listener use lsnrctl reload as shown below instead of lsnrctl stop and lsnrctl start. realod will read the listener.ora file for new setting without stop and start of the Oracle listener.

$ **lsnrctl reload**

LSNRCTL for Linux: Version 11.1.0.6.0 - Production on 04-APR-2009 17:03:31

Copyright (c) 1991, 2007, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=192.168.1.2)(PORT=1521)))

The command completed successfully

Oracle Listener Help

1. View Available Listener Commands

lsnrctl help command will display all available listener commands. In Oracle 11g following are the available listener commands.

-

start - Start the Oracle listener

-

stop - Stop the Oracle listener

-

status - Display the current status of the Oracle listener

-

services - Retrieve the listener services information

-

version - Display the oracle listener version information

-

reload - This will reload the oracle listener SID and parameter files. This is equivalent to lsnrctl stop and lsnrctl start.

-

save_config – This will save the current settings to the listener.ora file and also take a backup of the listener.ora file before overwriting it. If there are no changes, it will display the message “No changes to save for LISTENER”

-

trace - Enable the tracing at the listener level. The available options are ‘trace OFF’, ‘trace USER’, ‘trace ADMIN’ or ‘trace SUPPORT’

-

spawn - Spawns a new with the program with the spawn_alias mentioned in the listener.ora file

-

change_password – Set the new password to the oracle listener (or) change the existing listener password.

-

show - Display log files and other relevant listener information.

$ **lsnrctl help** LSNRCTL for Linux: Version 11.1.0.6.0 - Production on 04-APR-2009 16:12:09 Copyright (c) 1991, 2007, Oracle. All rights reserved. The following operations are available An asterisk (*) denotes a modifier or extended command: start stop status services version reload save_config trace spawn change_password quit exit set* show*

2. Get More help on Specific Listener Command

You can get detailed help on a specific oracle listener command as shown below. In the following example, it gives all the available arguments/parameters that can be passed to the lsnrctl show command.

$ **lsnrctl help show**

LSNRCTL for Linux: Version 11.1.0.6.0 - Production on 04-APR-2009 16:22:28

Copyright (c) 1991, 2007, Oracle. All rights reserved.

The following operations are available after show

An asterisk (*) denotes a modifier or extended command:

rawmode displaymode

rules trc_file

trc_directory trc_level

log_file log_directory

log_status current_listener

inbound_connect_timeout startup_waittime

snmp_visible save_config_on_stop

dynamic_registration

Finding which table contains a column in the oracle

SELECT TABLE_NAME FROM USER_TAB_COLUMNS WHERE COLUMN_NAME='id'

How to check oracle host name

sql> **select host_name from v$instance;**

Install Oracle for ruby-oci8 on Ubuntu

Here is the very nice page describing all the details:

http://2muchtea.wordpress.com/2007/12/23/installing-ruby-oci8-on-ubuntu/

In short, download the below packages to somewhere on your system

instantclient-basic-linux-11.2.0.3.0.zip

instantclient-sdk-linux-11.2.0.3.0.zip

instantclient-sqlplus-linux-11.2.0.3.0.zip

and unzip them, say, in /opt/oracle/instantclient_11_2.

Then append the following lines into your .profile or .bashrc:

export PATH=$PATH:/opt/oracle/instantclient_11_2:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/oracle/instantclient_11_2

export ORACLE_HOME=/opt/oracle/instantclient_11_2

export TNS_ADMIN=/opt/oracle/instantclient_11_2

Note the orignal post was wrong about envrionment variable TNS_ADMIN. It should be TNS_ADMIN, not TNSADMIN.

Create your tnsnames.ora in /opt/oracle/instantclient_11_2. That's all.

Assume you it looks like this:

dbhost=

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = 10.216.21.75)(PORT = 1521))

)

(CONNECT_DATA =

(SERVICE_NAME = DP9A)

)

)

Now you connect with

sqlplus username/password@dbhost

That's all.

Windows tips

- Clear case

- Windows 7: customize how the date is displayed in the system tray

- How to stream your video from windows to tv via dlna

- Mapping/mounting drive letters to local folders in windows

- Sync lotus notes calendar with google calendar

- Win7家庭版显示或隐藏用户帐户

- How to create a bootable windows 7 usb flash drive

- 修改windows系统时间同步间隔时间

- Use putty as cygwin terminal

- 让win7资源管理器默认打开计算机而不是库文件夹的方法

- Dos常用命令

- Windows 7 - ms-dos bootable flash drive - create

- How to suspend/hibernate windows 7 from command line

- 键盘多媒体键的一些研究心得

- 140个绝对绝对值得收藏的电脑技巧

- Freeware

- How to access metro style apps installation folder in windows 8

- Finding which cygwin package contains a particular file

- Windows 7 使用 ipv6 翻墙

- Open new instance for each excel sheet

- Fix: “the selected file is not a valid iso file” error in windows 7 usb/dvd download tool

- Clear case

- 用ntloader来引导linux

- How did i enable alcor micro smart card reader in virtualbox

- How to disable ads on skype

- Disk usage tool

- Open excel in new window instance (very useful when you have dual monitors)

- File type association commands on windows

- Change login screen on windows 7

Clear case

ClearCase Support: Understanding Config Specs

I recently had a question concerning how to understand configuration specifications or config specs in ClearCase Views, so here goes.

A config spec is the mechanism that a ClearCase View determines what versions of an element that the user accesses. A config spec is only editable, by default, by the account that created the View. A config spec has a single rule on each line, and the lines are interpreted by ClearCase from the top to the bottom as the order of importance. For example, when you create a new ClearCase View, the default config spec is set to this:

element * CHECKEDOUT

element * /main/LATEST

Each rule basically consists of three parts. First the word "element", second what element to find, and third is the version to access. In this default config spec example, the first rule says to access the checkedout version of the element if the current View has the element checkedout for each element. If the View does not have the element checkedout, then the next rule is interpreted. In this example, the next rule dictates that the View will access the latest version of the element on the /main/ branch. This rule will guarantee to find a version to access, so any further rules, if any existed, will be ignored.

Lets take a look at this more complicated config spec with example #2:

element /vob/test/a.txt /main/3

element /vob/test/b.txt /main/4 # This is a comment.

#element * /main/LATEST

# The previous line is a comment, thus completely ignored.

element /vob/test/ /main/LATEST

The first rule states to only access the /main/3 version of the element "/vob/test/a.txt". This element may or may not exist, and ClearCase has no verification. Any other elements of a different path will ignore this rule. The second rule states to only access the /main/4 version of a different element called "/vob/test/b.txt". Note that anything after the first # symbol is a comment and is ignored. The third and fourth lines are comments, so they will be ignored even though they may have embedded rules. The fifth line says for all elements in the VOB call "/vob/test", access the latest versions on the /main/ branch, unless a previous rule already selected a version. Note that there are no rules in this config spec to access any versions in any other VOB, so all other VOBs will be inaccessible with this config spec.

You may want to take a mental break now, since the next example is much more complicated. If you dont know what are labels or branches yet, I recommend reading the other training web pages first. Here is example #3:

element /vob/training/hockey/ HOCKEY_LABEL

element /vob/training/baseball/ BASEBALL_LABEL

element /vob/training/football/ /football_branch/LATEST

element /vobs/training/ /main/LATEST

The first line says to access only the versions that have a label called "HOCKEY_LABEL" in the directory called /vobs/training/hockey. Not all files (or sub-directories) in this directory may have this rule, so these elements will not be accessed from this rule. Similarly, the second line says to access only the versions that have a label called "BASEBALL_LABEL" in the directory called /vobs/training/baseball. The third rule says to access the latest versions in the "/vob/training/football" directory on the /football_branch/ branch if that branch exists for each element. Otherwise the fourth rule says to access all other elements that the previous rules did not define to access by accessing the latest versions in the "/vob/training/" directory on the /main/ branch.

Confused yet? Well, it gets MUCH more complicated. Here is example #4:

element /vob/test/a.txt -none

element b.txt -none

element * /main/test/LATEST

element Cfile * /main/LATEST

element -directory * /main/LATEST

The first rule says to not access any versions of the element "/vob/test/a.txt". The second rule says to not access any versions that have the element name "b.txt" even if multiple files have the same filename in different directories. I strongly to always use the full paths when modifying config specs, otherwise unintended consequences may happen. The third rule says to access all the latest versions on the /main/test/ branch. Well, this is a bad example, because what if the user wanted to access versions on the /test/ branch, but the /test/ branch was not branching from the /main/ branch? For example /main/abc/test/3 would not be seen in this config spec. The better solution for this line would be "element * /test/LATEST".

The fourth rule says to access the latest versions of all files on the /main/ branch. The fifth rule says to access the latest versions of all directories on the /main/ branch too. The fourth and fifth rules combined are equal to "element * /main/LATEST", but there are sometimes reasons to handle directories and files differently.

Here is most confusing config spec example, and the most likely to be seen in the real world. Here is example #5:

element * CHECKEDOUT

element * /developers_branch/LATEST

element -file * RELEASED_LABEL -mkbranch developers_branch

element -file * /main/LATEST -mkbranch developers_branch

element * /main/LATEST

The first rule states to access the checkedout version if the current View has the element checkedout. The second rule states to access the latest version on the branch called /developers_branch/ if the branch exists. This is where the software developers typically make their code or documentation changes on their own personal branch. Each developer should have a unique branch for each change they are implementing too. The developer must have already created the branch type manually for this line to work correctly.

If the element is not already being modified on the developers branch, then the third rule will access the version for files that were labeled using the label called "RELEASED_LABEL", if the label exists. Furthermore, this is the version that will be branched from if the developer tries to checkout, if this label exists. The fourth rule is the same rule as the third rule, except this is for all files that do not have the label called "RELEASED_LABEL", so that new files can be added to source control and be accessed and modified accordingly. Finally, the fifth rule is for all the remaining elements, such as directories, to access the /main/LATEST versions and checkouts will not be on the developers branch.

I hope this explanation was clear, otherwise here is how to contact me for free advice.

by Phil B. From http://www.philforhumanity.com/ClearCase_Support_17.html

Clear Case commands

cleartool serview <owername>

cleartool lsview |grep <owername>

cleartool catcs

cleartool setcs <owername>

cleartool rmview -f -tag <owername>

cleartool edcs

cleartool lsco -r -me

cleartool lsco -r |grep <owername>

cleartool pwv

cleartool lsprivate | grep -v checkedout | xargs rm -rf

cleartool mkview -stgloc ims_1views -tag mgc5.2_dim_econzho

cleartool co -unr -nc filename

cleartool ci -nc filename

cleartool unco filename

makepack

Windows 7: Customize How the Date is Displayed in the System Tray

By default the Windows 7 taskbar uses large icons, which makes for a bigger taskbar. This easily allows not only the time to show in the system tray, but the date as well. You can customize how the date is displayed and what information it will show.

- Go to the Control Panel.

- Select Clock, Language, and Region.

- Select Region and Language.

- Click the Additional settings button.

- Select the Date tab.

- Go to the Date formats section. Use the Short date dropdown to select the desired display. Click the Apply button to view the results.

If you don’t see what you want, you can add your own by placing your cursor on the dropdown and typing.

You will need to keep these notations in mind:

d, dd are for displaying the date

ddd, dddd are for displaying the day of the week

M, MM, MMM, MMMM are for displaying the month

yy, yyyy are for displaying the year.

By using these notations and seperators such as hyphens, commas, periods, etc. you can customize your date to display in a number of ways.

For example:

ddd MM/dd/yy

will make your date look like this:

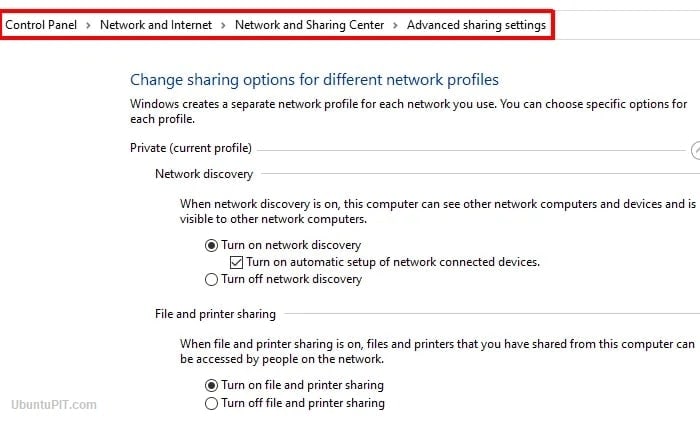

How to stream your video from Windows to TV via DLNA

It’s a fact: Gone are the days that homes are built with computer rooms and family/living rooms in their floor plans. The future of sit-down family entertainment is here, and dear lord its about time. This month, my family decided to purchase a nice, new Samsung Series 6 6300 LED TV and a brand new Samsung Blu-Ray player. Now this purchase is not something new to the modern home, but in this case something new was hiding behind that TV that made the situation different. Something exciting. It was…a LAN connection.

Yes, you heard me right. A LAN connection on a HDTV. And let’s be honest, it made perfect sense. As the realm of internet connectivity and availability expands in our lives, the lines between specific devices doing specific things are getting blurred. If phones can tweet, web surf, and even become a router via tethering, why can’t televisions connect to your home internet network? A web-enabled TV in the living room is just plain, simple natural evolution.

Okay so maybe all this is old news to most techies out there. But regardless, if Microsoft has been advertising this innovation for years or not, I just had to try it out for myself.

Getting Started

For my computing test bed, I used an Asus 1005HA netbook running Windows 7 Home Premium and connected to the local family network. On the TV end, Samsung has a built-in program called AllShare that lets the TV connect and play media from the local area network. This type of TV connectivity is in part thanks to the Digital Living Network Alliance(hence the acronym DLNA), a coordinated effort of major companies to allow inter-accessibility between devices and products. Since the main point of DLNA (and Samsung’s AllShare) is to connect media, of course Windows 7 and Windows Media Player fits the bill quite well.

Setting Up Windows Media Player

Using homegroups and Windows Media Player in Windows 7 is easy. First, make sure all your photos, videos, and music are in the right libraries and that you are allowing them to be shared in your homegroup. Then, load Windows Media Player and hit the nice big “Stream” button in the middle to begin the TV/computer sharing fun.

Windows Media Player allows two methods of sharing your files with your home network: allowing devices to play your media, and allowing full remote access to your media. But before these can be explored, let’s set up Windows Media Player by allowing internet access to your home media. To ensure that your media is not being accessed by hooligans and ruffians on the internet, Windows 7 does this by linking your media access to your Windows Live account. Sounds safe enough, right?

Once this is done, Windows Media Player is ready to allow media to be allowed on DLNA TV.

Sending Media to Your TV

Like I mentioned before, there are two ways to show your media on your TV through Windows Media Player (weren’t you listening?). Here is method one: pushing media to the TV while using your computer. First, we need to allow devices to play any media.

As you can see by the nice screenshot above, the Samsung TV is not only on the network but has been allowed to see my shared media. Next, let’s find a file to push to the TV. This is done by right clicking on the file and picking the “Play To” option in Windows Media Player.

If all goes well, your TV should ask if you want to play the video (just in case you changed your mind). After a tough choice of clicking either “Allow” or “Deny,” your video should start streaming to your TV.

As seen on your TV

As seen on your computer screen

date: November 29, 2005, 7:01pm PST author(s): Greg Shultz

Mapping/mounting drive letters to local folders in Windows

If you regularly work with files stored in shared folders on a Windows XP network, chances are that you've used Windows' Map Network Drive command to map a drive letter to that folder. Wouldn't it be nice if you could map a drive letter to a nested folder on your hard disk? Then, you could access nested subfolders just as easily as you can access shared folders on the network.

Fortunately, you can do just that. Unbeknownst to most Windows users, there's an old DOS command called Subst that's designed to associate a drive letter with any local folder—and it's still a viable tool in Windows XP. Here's how to use the Subst command:

- Open a Command Prompt window.

- Type the following command and press [Enter]:

subst x: C:\{pathname}\foldername}

In the command, x: is any available drive letter and { pathname }\ foldername } is the complete path to your selected folder. For example:

subst x: C:\cygwin-linux\home\handanie\workplace\RAT-TVP\fixtures\apduwrapper

Now, instead of typing the full path, you can reach the Drivers folder by accessing drive x: in Windows Explorer.

sync lotus notes calendar with google calendar

Setup Info:

Google now requires applications like LNGS to use an OAuth 2.0 Client ID to sign into Google Calendar.

You must create your own Client ID as follows:

-

Click Create Project.

-

Set Project Name to something like "LN Cal Sync". You can leave Project ID alone.

-

Click Create.

-

On the left side, click APIs & Auth.

-

Turn the Calendar API to ON and everything else OFF.

-

On the left side, click Credentials.

-

Click Create New Client ID.

-

Click Installed Application then select Other.

-

Click Create Client ID.

-

Click Download JSON.

-

Save the file into the same dir as lngsync.jar. It will have a long name like client_secret_760911730022-0nbs07o6o6qqc3ru4guooasalmrvbo89.apps.googleusercontent.com.json. Note: This file may be renamed to a shorter name because LNGS will look for any file named client_secret*.json.

-

Run LNGS and do a sync. A web browser window will open asking you to authorize Google Calendar access. After the authorization is complete, LNGS should be able to connect to Google Calendar automatically. If the web browser doesn't open, try deleting the credential file which is in the main LNGS directory and is named client_credential.

win7家庭版显示或隐藏用户帐户

首先关闭UAC,在控制面板里-用户帐户里面-选择”打开或关闭用户帐户控制” 去掉勾号,重启电脑。

如果想显示管理员,

- 使用安装时创建的帐号登陆windows7。

- 开始—>所有程序—>附件—>在“命令提示符”上右击—>选择“以管理员身份运行”—>“允许” 。

- 在打开的命令提示符窗口,输入”regedit”,回车,打开了注册表程序窗口。

- 进入HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Winlogon

- 在Winlogon上右击—>新建—项(k),名称为SpecialAccounts

- 在刚新建的SpecialAccounts上右击—>新建—>项(k),名称为UserList

- 在刚新建的UserList上右击—>新建—>DWORD (32-位)值(D),名称为Administrator,然后双击它,把它的键值改为1

- 在命令提示符窗口输入:net user administrator /active:yes 并回车。稍等就有成功提示。

- 注销或重启,就可以看到超级管理员administrator账户显示出来了!默认没有密码,可以登录啦!

【Daniel's note】其他用户不需要进行3-7部,只需将administrator改为相应的用户名即可。

如果想禁用,在命令行cmd中输入:net user administrator /active:no

How to create a bootable Windows 7 USB flash drive

The USB flash drive has replaced the floppy disk drive as the best storage medium for transferring files, but it also has its uses as a replacement for CDs and DVDs. USB drives tend to be higher in capacity than disc media, but since they are more expensive, they cannot (yet) really be used as a replacement. There are reasons why you would, however, choose a USB device over a DVD disc, and bootable software is definitely one of them. Not only is it faster to copy data such as setup files from a USB drive, but during usage the access times are also significantly faster. Therefore, installing something like Windows 7 will work that much faster from a USB drive than from a DVD (and of course, is particularly useful for the PCs without an optical drive; this isn't something we should just leave for the pirates to enjoy).

This guide will show you two different ways to create a USB flash drive that works just like a Windows 7 DVD. In order to follow this guide, you'll need a USB flash drive with at least 4GB of free space and a copy of the Windows 7 installation disc.

Windows 7 USB DVD Download Tool

You are normally given this tool when you purchase from the online Microsoft Store.

The easiest way to turn a USB flash drive into a bootable Windows 7 installer is by using the tool Microsoft offers, cunningly named the Windows 7 USB/DVD Download Tool. To get started, download the installer [exe] from Microsoft.com and follow the basic steps to put it onto your computer; you can put it on the computer you plan to install Windows 7 on or another one, it doesn't matter.

Once it is installed, it should create an icon on your desktop, so double-click that to open. If you can't find it, use the search function in the Start Menu with a keyword like "USB." Launching it should give you the above screen, and step one is to find the Windows 7 .ISO file. The tool only accepts .ISO images, so we recommend that you convert yours if it's in a different DVD image format.

Step two is straightforward: simply choose USB device.

In step three, all you have to do is make sure that you are choosing the correct USB device. If you have other data on the device, move it to your hard drive, another USB device, or somewhere else before proceeding.

The tool will prompt you if it detects data on the device. Once your data is backed up elsewhere, click Erase USB Device.

You will get another prompt warning you that all the data will be wiped. Click Yes to continue.

The format will be very quick, while the copying of the files will take a little bit more time (about 10 to 15 minutes).

Once the process is complete, you should get the above confirmation message. At this point you can close the tool and use the USB drive to install Windows 7. Remember that you'll have to choose to boot off the USB drive. Before doing so, you may want to open up the USB drive and double click on setup.exe to see if everything looks okay. If you want to be able to do this manually, see the next section, and if you want to be able to install any edition of Windows 7, skip to the section after that.

修改Windows系统时间同步间隔时间

我的主板也不晓得咋的,也许我每天会断开电源后才去睡觉的缘故,每天时间会无缘无故慢5分钟左右,这个是我绝对不允许的,于是要用到windows时间同步,但是这个东西默认的间隔时间太变态了,至少对我来说是如此。因为是604800秒,也就是7天,我日,要慢半小时了。我的要求是,一小时给我校对一次,于是打开注册表路径,将其修改SpecialPollInterval键值为3600。

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\W32Time\TimeProviders\NtpClient]

Use PuTTY as Cygwin terminal

“Cygwin is a Linux-like environment for Windows.” This means, you can use linux/unix commandline tools like ls, grep and find on your Windows system. However, the default installation of Cygwin uses Windows’ default commandline terminal cmd.exe, which is not really handy. Fortunately, there’s a solution to use PuTTY as Cygwin terminal.

- Download and install Cygwin. The setup will download all needed packages, so make sure you check what you need (my main reason to install Cygwin was to have a Git client on Windows)

- Download PuTTYcyg and extract the contents of the archive anywhere on you hard drive

- Start

putty.exe, selectCygtermas connection type and enter-(dash) as command. Enter a session name (e.g.cygwin) in the text field belowSaved Sessionsand click onSave. - Create a shortcut to

putty.exe. Right click the shortcut, selectPropertiesand append the following string to the target field:-load "cygwin". Of course you have to replacecygwinwith the name of the session you saved in PuTTYcyg. - Open the shortcut and you should directly get into your Cygwin shell

让win7资源管理器默认打开计算机而不是库文件夹的方法

点击任务栏上的资源管理器图标,Windows 7默认打开那个库文件夹而不是以往的“计算机(我的电脑)”,这点一定让很多人感到很不方便,虽然可以将“计算机”固定到资源管理器的JumpList列表里,但总让人觉得别扭。

想恢复原来的方式?其实很简单,只需要在资源管理器指向的快捷方式稍作修改就可以达到目的。首先关闭所有的资源管理窗口,然后按住Shift并鼠标右击任务栏上的资源管理器图标,切换到“快捷方式”标签,在目标框里默认路径结尾加上一个空格和英文逗号,点击确定完成。

直接点击任务栏上的资源管理器图标,你会看到直接打开计算机目录了。

DOS常用命令

要想操作使用DOS,知道一些常用命令是非常重要的。以下是一些命令的用法。

文件名是由文件路径和文件名称合起来的,如C:\DOS\COMMAND.COM。

DIR 显示文件和文件夹(目录)。 用法:DIR [文件名] [选项]

它有很多选项,如/A表示显示所有文件(即包括带隐含和系统属性的文件),/S表示也显示子文件夹中的文件,/P表示分屏显示,/B表示只显示文件名,等等。 如 DIR A*.EXE /A /P 此命令分屏显示当前文件夹下所有以A开头后缀为EXE的文件(夹)。

CD或CHDIR 改变当前文件夹。 用法:CD [文件夹名] 若无文件夹名则显示当前路径。

MD或MKDIR 建立文件夹。 用法:MD 文件夹名

RD或RMDIR 删除文件夹。 用法:RD 文件夹名 注意:此文件夹必须是空的。

DEL或ERASE 删除文件。 用法:DEL/ERASE 文件名

COPY 拷贝文件。 用法: COPY 文件名1 [文件名2] [选项] 如 COPY /B A+B C 此命令将两个二进制文件A和B合为一个文件C。

TYPE 显示文件内容。 用法:TYPE 文件名

REN或RENAME 改变文件名,在DOS7中还可以改变文件夹名。 用法:REN 文件(夹)名1 文件(夹)名2

EDIT 编辑文件,在DOS7中还可以编辑二进制文件。 用法:EDIT [文件名] [选项] 如 EDIT /70 C:\COMMAND.COM 此命令以二进制方式编辑C:\COMMAND.COM文件。

FORMAT 格式化磁盘。 用法:FORMAT 驱动器 [选项]

它的选项很多,如/Q是快速格式化,/U表示无条件格式化(即无法使用UNFORMAT等命令恢复),/V指定磁盘的卷标名,等等。它还有许多未公开参数。

MEM 显示内存状态。 用法:MEM [选项]

它的选项也有不少,如/C可列出所有程序的内存占用,/D是显示驻留内存的程序及设备驱动程序的状态等详细信息,/F显示空闲的内存总量,/M显示内存中的模块信息,/P则是分屏显示。还有隐藏的/A选项,可以显示HMA信息。

MOVE 移动文件或文件夹,还可以更改文件或文件夹的名称。 用法:MOVE 文件[夹]1 文件[夹]2 如 MOVE C:*.EXE D: 此命令可以将C盘根文件夹下所有扩展名为EXE的文件移到D盘上。

XCOPY 复制文件或文件夹。 用法:XCOPY 文件[夹]名1 [文件[夹]名2] [选项]

它的选项非常多,如/S可拷贝整个文件夹(包括子文件夹)中的文件,/E指定包括空文件夹,/V表示复制完后检验复制出的文件的正确性,/Y表示确认,等等。

CLS 清除屏幕。 用法:CLS

SYS 传导系统,即将系统文件(如IO.SYS等)从一处传输到指定的驱动器中。 用法:SYS 文件夹名 [驱动器] 如 SYS C:\DOS A: 此命令即可将位于C:\DOS文件夹下的系统文件传输到A盘中。

DATE 显示或设置日期。 用法:DATE [日期]

TIME 显示或设置时间。 用法:TIME [时间]

DOS还自带一些其它的命令,如SORT(排序),FIND(寻找字符)等。

Windows 7 - MS-DOS Bootable Flash Drive - Create

Information

This will show you how to create a flash drive that is able to boot your computer into a MS-DOS environment. This will be accomplished by using the HP Flash Utility and the Windows 98 MS-DOS System Files

Warning

The flash drive being used in this process will be formatted. Please backup all of your data on the drive beforehand!

Here's How:

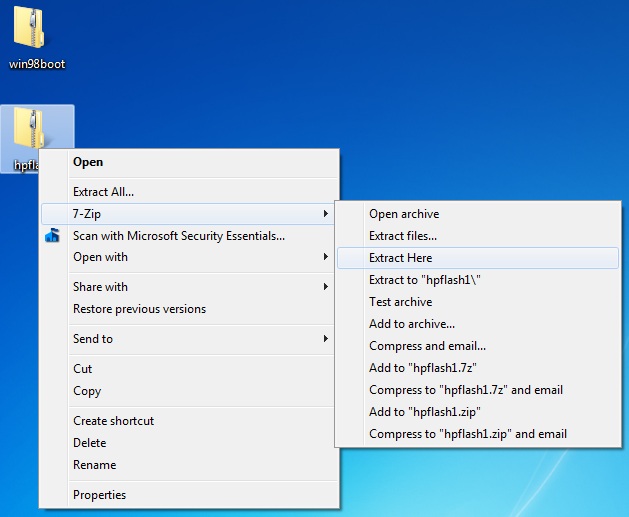

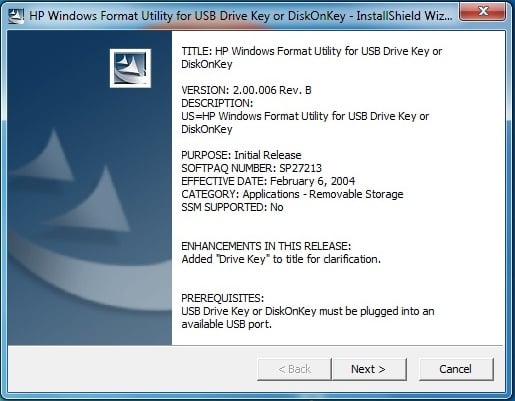

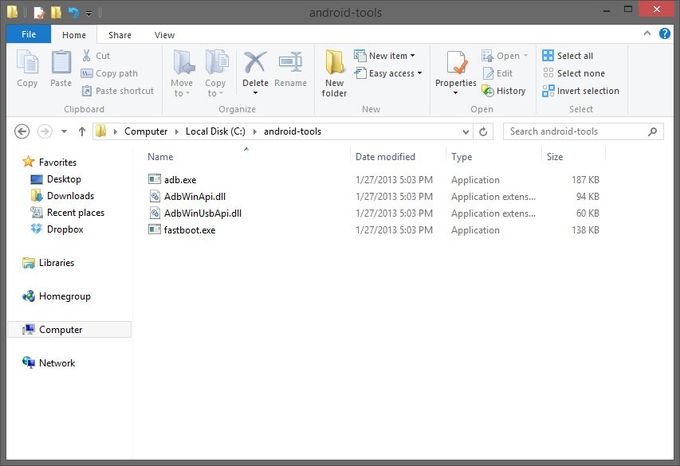

1. Download the HP Flash Utility hpflash1.zip and also download the Windows 98 MS-DOS System Files win98boot.zip. Extract hpflash1 to a location readily accessible

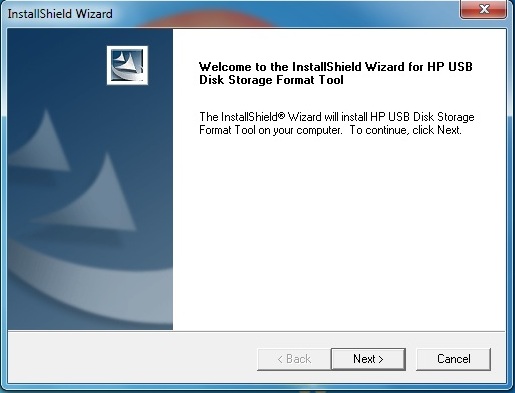

2. Run the installer and follow the simple on-screen instructions

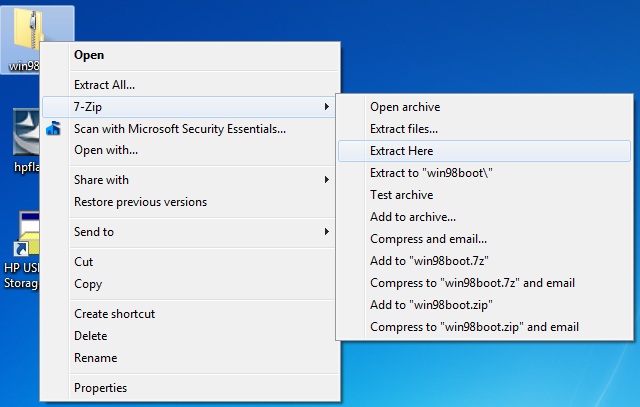

3. Extract the contents of "win98boot" to a location readily accessible

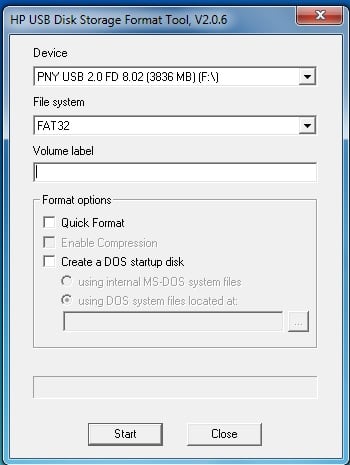

4. Run the HP USB Disk Storage Format Tool that was just installed. Choose your flash drive from the drop down list at the top. Also make sure that the file system is set to FAT32.

5. Under Format Options tick the " Create a DOS startup disk " option. Click the "..." button near the empty text box to browse to the location of where you extracted the Windows 98 MS-DOS System Files (see step 3 ).

Tip

Tick " Quick Format " under Format Options to speed the process up (if you don't want a comprehensive format). You may also want to give the drive a label by typing one in the Volume Label text field

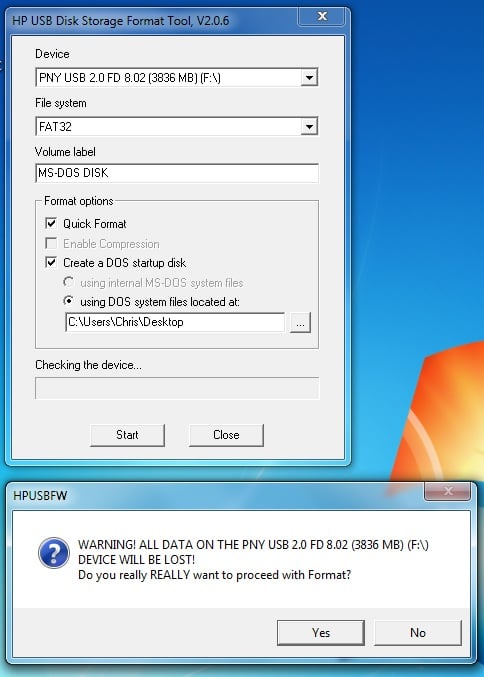

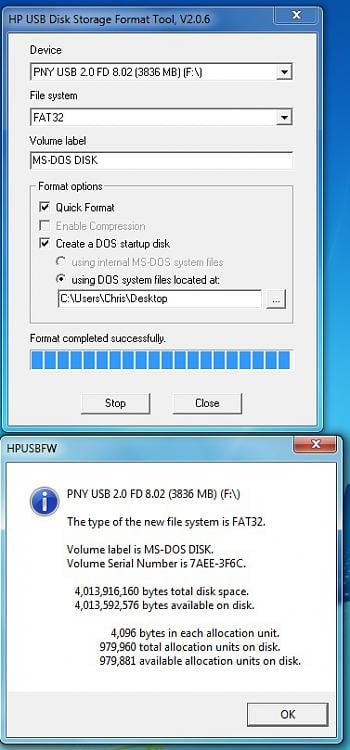

6. Click the "Start" button.

Warning

After you click the "Start" button , you will be given one final prompt that warns you that the flash drive selected will be formatted. This is your last chance to backup data on the drive; after you click yes it will be too late

Tip

You may remove the files extracted from the archive "win98boot", they are not needed anymore

_ Frequently Asked Questions_

Q: Why would a MS-DOS USB Drive be useful today? Wasn't that included in older Operating Systems?

A: MS-DOS was the underlying layer of the Windows 9x series of Operating Systems (Windows 1-ME) that was the "functionality" of the system. A MS-DOS bootable disc can be used to run many recovery tools (still used today) and also update the BIOS of the computer. If you are running an x64 system with no floppy drive and a manufactuer who only provides a 16-bit BIOS updater, this method is the only way to update your BIOS.

Q: I checked my flash drive after applying the steps above and it is empty? What gives?!

A: Unless the option to show protected operating system files is checked in folder options, Windows will hide these files due to their attributes matching that of system files. Don't worry, the relevant files are still there

Q: Alright I successfully completed the steps above, now what do I do?

A: Now you can download the tool you wanted to run (such as CHKDSK) or your BIOS update application (from the manufactuer) and place the files on to the root of the flash drive. You can find most tools by doing a simple google search

Tip

Tip

The Windows 98 MS-DOS System Files archive (win98boot) above has some system tools already included (ex. FORMAT, FDISK, SYS). Just drag and drop these files onto your flash drive

Q: My flash drive has been converted to the MS-DOS System Disc and I have some tools/BIOS revisions on the drive as well, how do I boot up MS-DOS?

A: This will largely depend on your model of computer but you will need to restart you computer with the flash drive plugged in and boot to the drive by means of a boot menu or by modifying your BIOS to boot to the flash drive first. Consult the manual of your computer or the respective manufactuer's website for further details.

Q: I have no further need for the MS-DOS System Disc, how can I remove it from my flash drive?

A: You may format the flash drive with the built in Windows format utility or you may format it by using the HP USB Disk Storage Format Tool that was used above.

Hope it helps,

Chris

How to suspend/hibernate windows 7 from command line

Shutdown Computer

Shutdown.exe -s -t 00

Restart Computer

Shutdown.exe -r -t 00

Lock Workstation

Rundll32.exe User32.dll,LockWorkStation

Hibernate Computer

rundll32.exe PowrProf.dll,SetSuspendState

Sleep Computer

rundll32.exe powrprof.dll,SetSuspendState 0,1,0

The command rundll32.exe powrprof.dll,SetSuspendState 0,1,0 for sleep is correct - however, it will hibernate instead of sleep if you don't turn the hibernation off.

Here's how to do that:

Go to the Start Menu and open an elevated Command Prompt by typing cmd.exe, right clicking and choosing Run as administrator. Type the following command:

powercfg -hibernate off

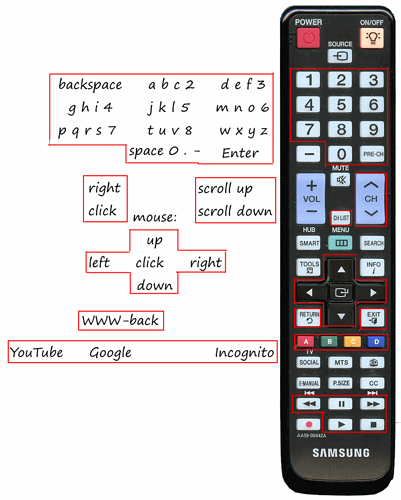

键盘多媒体键的一些研究心得

前言 最近对键盘的多媒体键产生了兴趣,研究了一些心得,特此记录下来与大家分享。

本文共分为以下四个部分: ² 多媒体键简介 ² 定制多媒体键 ² 定制键盘任意键 ² 改造普通键盘硬件,增加多媒体键

多媒体键简介

目前最常用的标准键盘是104键键盘,是在IBM定义的101键键盘标准上增加了两个Windows徽标键和一个右键菜单键而成,俗称Win95键盘。此类键盘一直沿用IBM标准,采用行列矩阵方式布局排列,称为扫描矩阵,扫描矩阵定义为8行×16列=128键。对于104键键盘而言,还有24个闲置键位未定义。107键键盘就是从这些闲置键位中选择了三个定义为“Power”、“Sleep”和“Wake UP”。后来微软又增加了18个键定义,用于完成音量调整、播放/暂停、打开浏览器等功能,相关文档参见:http://www.microsoft.com/whdc/archive/w2kbd.mspx。为了方便,本文中我们将这18个键统称为多媒体键,各键功能详见下表:

| 序号 | 键名 | 功能 | 注册表分支名称 |

|---|---|---|---|

| 1 | Volume UP | 音量提高 | |

| 2 | Volume Down | 音量降低 | |

| 3 | Mute | 静音 | |

| 4 | Play/Pause | 播放/暂停 | |

| 5 | Stop | 停止 | |

| 6 | Scan Previous Track | 上一曲 | |

| 7 | Scan Next Track | 下一曲 | |

| 8 | WWW Back | IE浏览器后退 | 1 |

| 9 | WWW Forward | IE浏览器前进 | 2 |

| 10 | WWW Refresh | IE浏览器刷新 | 3 |

| 11 | WWW Stop | IE浏览器停止 | 4 |

| 12 | WWW Search | IE浏览器搜索 | 5 |

| 13 | WWW Favorites | IE浏览器收藏夹 | 6 |

| 14 | WWW Home | IE浏览器首页 | 7 |

| 15 | 邮件 | 15 | |

| 16 | Media Select | 媒体选择(播放器) | 16 |

| 17 | My Computer | 我的电脑 | 17 |

| 18 | Calculator | 计算器 | 18 |

微软、罗技等一些厂家都推出过各式各样的带有多媒体键的键盘,有音量调节、播放、停止、计算器、复制、粘贴、备份、还原等多种功能,但有相当一部分各类的键盘需要另行安装驱动程序或应用程序。本文所讨论的多媒体键盘,仅指在XP/Vista/Windows7等操作系统下只使用系统自带的驱动程序和HID Input Service 服务,多媒体键即可生效的键盘,如微软精巧500/600、DELL 8135等,这些键盘使用的驱动程序名称为HID Keyboard Device(USB接口键盘)或“标准 101/102键盘或microsoft 自然 PS/2 键盘”(PS/2接口键盘)。 另:按照微软提供的USB/PS2键盘扫描码对应表,USB键盘比PS/2键盘要多出一些键位定义,如:复制、粘贴、撤消等。因条件所限没有进一步研究。

定制多媒体键

多媒体键虽然有18个之多,但并不一样都是用户想要的功能。比如说我可能更需要一键打开记事本而不是计算器;按下“我的电脑”键我希望能运行的是Total Commander。微软考虑到了用户这个需求,提供了两种解决方案:一是安装微软提供的IntelliType Pro驱动工具,使用常驻内存的程序来控制各个键的定义。二是在注册表中提供了部分键的自定义功能,允许定制上表中8至18号键位功能。微软也提供了个工具TweakUI可以修改这些键的定义保存至注册表,但修改的内容有限。使用第三方驱动还要有常驻内存的程序,我个人不是很感冒。在这里我们详细了解一下注册表修改这种方法。

例一:修改Calculator计算器键,把它的定义改为打开记事本。只须将如下内容保存为notepad.reg,双击导入注册表即可:

代码:

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Explorer\AppKey\18]

"ShellExecute"="notepad.exe"

如果要改为打开默认的邮件客户端,可以将上面的"ShellExecute"="calc.exe"一行改为:

代码:

"Association"="mailto"

或者:

代码:

"RegisteredApp"="mail"

例二:修改My Computer我的电脑键,将其改为运行Total Commander。需要导入如下注册表项目:

代码:

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Explorer\AppKey\17]

"ShellExecute"=" D:\\Program Files\\TotalCmd\\TOTALCMD.EXE"

(Total Commander实际路径需要根据实际情况修改)

在以上两例中:

代码:

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Explorer\AppKey]

和

代码:

[HKEY_CURRENT_USER \SOFTWARE\Microsoft\Windows\CurrentVersion\Explorer\AppKey]

这两个主键下提供了对多媒体键定义功能,两个主键的区别是作用范围是全体用户还是当前用户。也就是说如果多人共用一台计算机,不同的账户下同一个键可以设定不同的功能。 每个多媒体键都有一个数字编号的分支,具体编号对应情况见上表。 键名有ShellExecute、Assocication和RegisteredApp三种类型可选: ShellExecute 是执行一个外部程序,在键值中指定要执行的程序名称即可。 Assocication是指定关联,可以将键值设定为一个扩展名如“.rar”,将调用Winrar程序;也可以指定一个协议,http即调用默认的http浏览器,mailto则是调用默认的邮件客户端。 [size=2]RegisteredApp是指定已注册的默认程序,键值可以设定为mail、news、Calendar、Contacts、Media[font=宋体]等,分别代表默认的邮件客户端、新闻组程序、日历、联系人、媒体播放器等。具体在注册表中的位置如下:

代码:

[HKEY_LOCAL_MACHINE\SOFTWARE\Clients]

定制键盘任意键

有人可能会问:我的键盘上只有表中1~7号多媒体键,或者根本就没有任何多多媒体键,有没有办法将某些键定义成打开指定程序或者其它多媒体键呢?回答是肯定的,那就是利用微软提供的扫描码映射(Scan Code Mapper)功能,详细资料参见:

http://www.microsoft.com/whdc/archive/w2kscan-map.mspx 。 实际上这是个很古老的技术了,KeyTweak、RemapKey、KeybMap等软件都是利用了这项技术通过修改注册表来完成键盘值转义功能。下面我们手动操作,将Scroll Lock 键改为打开记事本功能: 1、 先将Scroll Lock 键映射为Calculator键,即打开计算器。导入如下注册表项目:

代码:

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Keyboard Layout]

"Scancode Map"=hex:00,00,00,00,00,00,00,00,02,00,00,00,21,e0,46,00,00,00,00,00

2、 重启计算机,此时按下Scroll Lock 键,可以打开计算器。 3、 按照前面介绍的方法,再将Calculator键的定义修改为打开记事本即可。 修改1~7号无法定制功能的多媒体键方法也是一样的。 注意:Power、Sleep、Wake Up 这三个电源键有些特殊,它们也可以映射到其它键,但必须先从控制面板-电源选项中将Power和Sleep的功能设定为“不采取任何操作”。另外在USB键盘上,这三个键映射无效,若是通过转换口连接到PS/2接口上,则是可以使用的。 为了减化操作,我升级了KeybMap这个工具,增加了对多媒体键的自定义功能,可以很方便地实现以上功能。有兴趣的朋友可以下载使用。下载地址: http://www.mympc.org/down/1/2005-11-26_0111998067.html

改造普通键盘硬件,增加多媒体键

这时又有朋友问了:我用的是标准104键键盘,不想修改现有的键定义,能不能增加几个多媒体键?回答仍然是可行的!不过需要你有一定的DIY能力,能够熟练的使用电烙铁等工具。

前面我们提到过现在通用的键盘都是使用了IBM定义的矩阵,一共有8行×16列=128个键位,空闲了24个键位。而电源键和多媒体键都是利用了这些空闲键位。由此想来现在常用的键盘控制器芯片一定可以完整处理这128个键,厂家没有必要为普通104键键盘和多媒体键盘开发不同的键盘控制器芯片,更没有必要在普通104键键盘上屏蔽那多余的24个键位处理能力。基于以上理由,我们推测市场上最常见的104键键盘能够支持多媒体键,只是没有做出相应的键位而已。 下面就来验证一下我们的推测:拆开一块普通的104键键盘,找到线路板,可以看到上面有与键盘薄膜电路相连的金手指触点,这些触点通过键盘薄膜电路两两组合,从而得到了不同的按键。因为这一部分电路没有统一的标准,我只能用穷举法来一个个测试:找一根导线按顺序短接不同的触点。经过一段时间的测试,我果然找到了静音、音量增大、音量减小、计算器、我的电脑等热键;不幸的是在测试的过程中我也找到了电源键,于是系统就自动地关机了……不管怎么说,试验是成功了。 我先后在IBM、联想、浪潮、清华同方等四块不同品牌计算机的键盘上做过以上试验,不管是PS/2接口还是USB接口的键盘,都是成功的。 下面要做的就是去电子市场买一些轻触开关,想办法固定在键盘的空闲的地方,找到所需的键位组合,用导线焊好就可以了。当然,要想做得美观,需要你有较强的动手能力。 也许费了这么大功夫,改造一块不到一百元的键盘纯属浪费精力,但是对我们DIY爱好者来说,结果并不是目的,探索的过程中才是我们最大的乐趣。我们口号是:生命在于折腾……

140个绝对绝对值得收藏的电脑技巧

- 重装Windows XP不需再激活

如果你需要重装Windows XP,通常必须重新激活。事实上只要在第一次激活时,备份好Windows\System32目录中的Wpa.dbl文件,就不用再进行激活的工作了。在重装Windows XP后,只需要复制该文件到上面的目录即可。

- 如何知道自己的Windows XP是否已激活

打开开始→运行,在弹出的对话框中输入:oobe/msoobe /a,回车后系统会弹出窗口告诉你系统是否已经激活。

- 关闭zip文件夹功能

你是不是觉得Windows XP中的zip文件夹功能太慢,功能也不吸引人?如果是这样,你可以打开开始→运行,在弹出的对话框中输入:regsvr32 /u zipfldr.dll,回车后即可关闭ZIP文件夹功能。

4.让Windows XP也能刻ISO文件

Windows XP没有提供直接刻录ISO文件的功能,不过你可以下载一个第三方插件来为系统增加这个功能。该插件的下载地址为:http://members.home.net/alexfein/is...rderSetup.msi

- 登陆界面背景变变色

打开注册表编辑器,找到[HKEY-USERS\.DEFAULT\Control Panel\Colors],将Background的值改为“0 0 0”(不带引号),这样登录背景就成了黑色。

- 完全卸载XP

有些朋友在安装Windows XP后发现自己并不喜欢这个操作系统,这时你可以用Windows 98安装光盘启动到DOS状态,然后键入: format -ur 这样可以删除所有XP的文件,并可重新安装Windows 98/Me。

- 系统救命有稻草

当怀疑系统重新启动之前针对计算机所进行的更改可能导致故障,可以在启动系统时按F8键,选择“最后一次正确的配置”来恢复对系统所做的更改。

- 恢复硬件以前的驱动程序

在安装了新的硬件驱动程序后发现系统不稳定或硬件无法工作时,只需在“设备管理器”中选择“驱动程序恢复”按钮,即可恢复到先前正常的系统状态。但不能恢复打印机的驱动程序。

- 自动登陆

单击开始→运行,输入“rundll32 netplwiz.dll, UsersRunDll”(不带引号),然后在User Accounts中取消“Users must enter a user name and password to use this computer”,单击“OK”,接着在弹出的对话框中输入你想自己登陆的用户名和密码即可。

- 快速关机或重启的快捷键(可定时)

在桌面点击鼠标右键,选择新建(快捷方式,在弹出的向导中输入位置为:C:\WINDOWS\system32\shutdown.exe -s -t 10(其中的-s可以改为-r,也就是重新启动,而-t 10表示延迟10秒,你可以根据自己的需要更改这个数字)。制作完这个快捷键后,按照上面的方法为它指定一个图标。

这个快速关机或重启的技巧会显示一个比较漂亮的对话框提示关机(重启),而且你可以在后面加上“-c "我要关机啦!"”,这样这句话会显示在对话框中,当然文字你可以随意写,但要注意不能超过127个字符。

- 关机、重启只要1秒钟

如果你想让Windows XP瞬间关机,那么可以按下CTRL+ALT+DEL,接着在弹出的任务管理器中点击“关机”→“关机”,与此同时按住CTRL,不到1秒钟你会发现系统 已经关闭啦,简直就在眨眼之间。同样道理,如果在“关机”菜单中选择“重启”,即可快速重启。

- 寻找丢失的快速启动栏

把鼠标移到任务栏的空白区域,单击右键从弹出的菜单中选择“属性”,在弹出的窗口中选择“任务栏”选项卡,再从“任务栏外观”框中把“显示快速启动”的复选框选中,“确定”就行了。

- 批量文件重命名

Windows XP提供了批量重命名文件的功能,在资源管理器中选择几个文件,接着按F2键,然后重命名这些文件中的一个,这样所有被选择的文件将会被重命名为新的文件名(在末尾处加上递增的数字)。

- 快速锁定计算机

在桌面上单击鼠标右键,在随后出现的快捷菜单上选择新建“快捷方式”,接着系统便会启动创建快捷方式向导,在文本框中输 “rundll32.exe user32.dll,LockWorkStation”,点击“下一步”,在弹出的窗口中输入快捷方式的名称,点击“完成”即可。当然最简单的锁定计算 机的方法是直接按WinKey + L。

- 让双键鼠标具有滚页功能

在控制面板中双击“鼠标”项,在弹出的“鼠标属性”对话框中选择“单击锁定” 栏中的“启动单击锁定”项。再点击“设置”按钮,在弹出的“单击锁定的设置”对话框中将鼠标设为最短,点击“确定”保存。打开一个网页,点击网页的卷轴, 在离网页卷轴不远处上下移动鼠标,即可实现此功能。再次点击网页的卷轴即可关闭此功能。

- 让Windows XP读英文字母

找到“辅助工具”组里的“讲述人”程序,点击“声音”按钮,进入“声音设置”界面进行一番设置然后保存即可。当你把鼠标指向带有英文字母的对话框、菜单以及按钮时,会听见一个男声读着英文

-

恢复Windows经典界面 很多人安装了Windows XP后的第一感觉就是Windows变得漂亮极了。只是可惜美丽的代价要耗掉我们本就不富裕的内存和显存。要想恢复到和经典Windows类似的界面和使 用习惯,请在桌面上单击鼠标右键,选择“属性”命令即可进入“显示属性”的设置窗口。这里共有“主题”、“桌面”、“屏幕保护程序”、“外观”和“设置” 五个选项卡。在当前选项卡的标签上会有一条黄色的亮色突出显示,默认是“主题”选项卡,这里我们只要在“主题”的下拉选单里选择“Windows经典”, 立即就可以在预览窗口看到显示效果,同时,外观选项卡的内容也会随之进行更改。注意:Windows XP中“主题”和“外观”选项卡是息息相关的,只要更改其中的任何一个选项,在另一个选项卡中也会看到相应的改变。

-

恢复“开始”菜单 Windows XP新的“开始”菜单还是挺方便的,对系统资源的影响也不大。如果你喜欢过去的经典菜单的话,用鼠标右键单击任务栏空白处,选择“属性”菜单,进入“任务 栏和开始菜单属性”的设置窗口,选择“[开始]菜单”选项卡,选择“经典[开始]菜单”即可恢复到从前的模样了。

-

优化视觉效果 Windows XP的操用界面的确是很好看,好看的背后是以消耗大量内存作为代价的,相对于速度和美观而言,我们还是宁愿选择前者,右键单击“我的电脑”,点击“属性/ 高级”,在“性能”一栏中,点击“设置/视觉效果”,在这里可以看到外观的所有设置,可以手工去掉一些不需要的功能。在这里把所有特殊的外观设置诸如淡入 淡出、平滑滚动、滑动打开等所有视觉效果都关闭掉,我们就可以省下“一大笔”内存。

-

禁用多余的服务组件 Windows XP和Windows 2000一样可以作为诸如Http服务器、邮件服务器、FTP服务器,所以每当Windows XP启动时,随之也启动了许多服务,有很多服务对于我们这些普通用户来说是完全没用的,所以关掉它们是一个很好的选择。操作的方法是:右键单击“我的电脑 ”,依次选择“管理/服务和应用程序/服务”,将不需要的服务组件禁用。注意:有些服务是Windows XP必需的,关闭后会造系统崩溃。查看详细说明确认后再禁止。编者注:对于Windows XP常见的90个服务,本文将有专门章节分析。

-

彻底隐藏文件 每个人的机器上都或多或少有一点不愿意别人看见的东西,怎么办,直接隐藏起来吗?一打开显示隐藏文件就什么都看见了,其实你只要在 HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersionexplorer \Advanced\Folder\Hidden\SHOWALL下, DWORD值Checkedvalue设为0(如果没有这一项可新建一个),这样当有非法用户开启显示隐藏文件功能后,你的文件也不会被显示出来。

-

添加“显示桌面”项 在默认安装下,我们在任务栏中找不到熟悉的 “显示桌面”按钮,难道Windows XP中没有这个非常好的功能了吗?其实不是,你依次单击“开始/设置/控制面板/任务栏和开始菜单”,在“任务栏和开始菜单属性”窗口中,将“显示快速启 动”选项打上勾,“显示桌面”项就会出现在任务栏中了。

-

关闭华医生Dr.Watson: 在"开始"->"运行"中输入"drwtsn32"命令,或者"开始"->"程序"->"附件"->"系统工具"->"系 统信息"->"工具"->"Dr Watson",调出系统里的华医生Dr.Watson ,只保留"转储全部线程上下文"选项,否则一旦程序出错,硬盘会读很久,并占用大量空间。如以前有此情况,请查找user.dmp文件,删除后可节省几十 MB空间。

Freeware

amarafssb.zip 这是一个电子相册制作软件。

How to Access Metro Style Apps Installation Folder in Windows 8

Edited by Zia Zafari, Eric Shiels, Amandabl, BR and 8 others

Windows 8 contains dozens of built-in Metro style apps. These apps run almost perfectly but, if you are a developer or regular user and want to change the core files of these app you need to go to the installation files of those apps in-order to change something. Seems simple! But, it is not. You don’t have access by default to go to the applications folder and you are denied access to that folder by Windows.

Navigate to the installation directory of Windows 8 (It should be C: \)

3.

Open up the ‘program files’ folder

4.

Now, you should see an applications folder named 'WindowsApps'. If not then navigate to the ‘View’ menu and mark the ‘hidden items’ option

* Your applications folder should be visible now.

5.

Open up the applications folder

* You would be denied access to the folder

6.

Click ‘continue’

* Again, you would be told ‘you have been denied permission to access this folder’

7.

Click ‘security tab’. It would be underlined

* A pop-up dialog would open showing different options in the ‘security tab’

8.

Click ‘Advanced’

* Another pop-up dialog box would open. Click ‘change’ against the ‘owner’

* Another pop-up dialog box would open.

9.

Now, there you need to write down the ‘Username’ or ‘Windows Live ID’ in the text box named as ‘enter the object name to select’, which you use to log-in to Windows 8

10.

After writing the name, click ‘Check Names’

* Your ID would be identified and if found correct you full name would be displayed along with your email in the text box.

11.

Click ‘ok’

* In the next window again click ‘ok’

12. Keep clicking ‘ok’ unless/until you reach the error message that you ‘have been denied access’

13.

Click ‘close’

* You would be taken again to the ‘program files’ folder.

14.

Open up the applications folder and you would be asked for permission again

15.

Click ‘continue’ * Your applications folder would open.

Finding which cygwin package contains a particular file

I often need to install a cygwin package to get a single file, but I can't find which package I need. This article explains how.

The other day I needed to install the cygwin "strings" command. No problem, I'll just run the cygwin installer, grab the "strings" package, and I'll be all set. But not so fast. There is no "strings" package; "strings" is part of some other package. But which one? I finally stumbled upon the cygwin package-grep facility. By using the URL:

http://cygwin.com/cgi-bin2/package-grep.cgi?grep=strings.exe

I was able to determine that strings.exe is in the "binutils" package. A few minutes later, I was happily using the "strings" command.

I haven't found a user interface to package-grep, so I just build the URL by hand.

Windows 7 使用 IPv6 翻墙

Vista/Win7的方法是鼠标右键点击“开始->程序->附件->命令提示符”,选择“以管理员身份运行”。 在新开启的【命令提示符】窗口中执行以下两条命令:

netsh interface ipv6 isatap set router isatap.sjtu.edu.cn

netsh interface ipv6 isatap set state enabled

替换 C:\Windows\System32\drivers\etc\hosts为附件中的内容。

Open new instance for each excel sheet

This worked for Windows 7, excel 2010:

In Windows 7 you have to edit the registry to remove DDE completely. You can first try checking the "Ignore other applications that use Dynamic Data Exchange (DDE)" box in Excel -> Excel Options -> Advanced. This alone might work for some – but generally it just results in an error message.

So the more comprehensive way is to:

Open regedit, browse to HKEY_CLASSES_ROOT\Excel.Sheet.8\shell\Open

Delete the ddeexec key, (or just rename it)

Then click on the "command" key and replace the /e on the end of the (Default) and command strings with "%1"

Quotes around %1 are important.

After the change, the lines should look like this:

(Default) REG_SZ "C:\Program Files (x86)\Microsoft Office\Office14\EXCEL.EXE" "%1"

command REG_MULTI_SZ xb'BV5!!!!!!!!!MKKSkEXCELFiles>VijqBof(Y8'w!FId1gLQ "%1"

Then do the same for Excel.Sheet.12

Now Both .xls and .xlsx should open in new windows with no errors.

Fix: “The Selected File Is Not A Valid ISO File” Error In Windows 7 USB/DVD Download Tool

These days, a large number of PC users prefer installing Windows OS from a USB drive instead of traditional DVD disc. Even though there are plenty of better tools out there like Rufus to create bootable USB from an ISO file, most PC users prefer using the official Windows 7 USB/DVD Download Tool.

Windows 7 USB/DVD Download Tool sports an easy-to-use interface and is compatible with Windows 8 and 8.1 as well, but at times when you open Windows ISO file by clicking the Browse button, the tool shows “The selected file is not a valid ISO file. Please select a valid ISO file and try again” error.

While I am no expert, the error occurs when the selected ISO file has only ISO9660 file system, and missing UDF and Joliet. In order to fix this error, you need to re-build the ISO file with ISO9660 + UDF + Joliet file system.

A quick Google search reveals that there are plenty of guides out there to fix this error but the catch is that all of them ask you download PowerISO or UltraISO software. The real catch is that both UltraISO and PowerISO aren’t free and you need to purchase their licenses. And no, the trail software can’t handle large ISO files.

Users who want to fix “The selected file is not a valid ISO file. Please select a valid ISO file and try again” error with the help of a free software can follow the given below instructions.

Method 1:

Step 1: Download ImgBurn software from here and install the same on your PC. As some of you know, ImgBurn is a free software and is compatible with all recent versions of Windows, both 32-bit and 64-bit systems.

Step 2: Launch ImgBurn, click Create image file from files/folder. Drag and drop the ISO file to ImgBurn window to add it to the source list.

Step 3: Select a location to save the new ISO file by clicking the Browse button next to Destination box.

Step 4: Click on the Options tab on the right-side pane of ImgBurn and select the file system as ISO9660 + Joliet + UDF from the drop-down menu.

Step 5: Finally, click the Build button (see picture) to begin saving the edited ISO file with new file system. Click Yes button when you see the confirmation dialog and click Yes button again if you see confirm Volume Label dialog box, and finally, click OK button to begin saving the ISO file.

Once the job is done, you can run Windows 7 USB/DVD Download Tool again and browse to the newly created ISO file to prepare the bootable USB/DVD without any issues.

Method 2:

If “The selected file is not a valid ISO file. Please select a valid ISO file and try again” error is appearing even after following the above mentioned workaround, we suggest you go ahead and download the popular Rufus tool and then follow the simple instructions in how to install Windows 8.1 from bootable USB guide to create the bootable media without any errors.

Clear Case

Rational ClearCase is a software tool for revision control (e.g. configuration management, SCM) of source code and other software development assets. It is developed by the Rational Software division of IBM. ClearCase forms the base of version control for many large and medium sized businesses and can handle projects with hundreds or thousands of developers.

Rational supports two types of SCM configurations, UCM, and base ClearCase. UCM provides an out-of-the-box SCM configuration while base ClearCase supplies all the basic tools to make it very configurable and flexible. Both can be configured to support a wide variety of SCM needs.

ClearCase can run on a number of platforms including Linux, HP-UX, Solaris and Windows. It can handle large binary files, large numbers of files, and large repository sizes. It handles branching, labeling, and versioning of directories.

用NTLoader来引导linux

我本人并不喜欢将grub安装到mbr,毕竟windows才是我主要用的系统,重装可能性比较大.grub安装到mbr会被重写,带来一些不必要要的麻烦.但写下重装到mbr的方法,以免误操作.

恢复被windows破坏的grub.

如果你用grub来引导linux和windows,当windows出毛病重新安装后,会破坏MBR中的grub,这时需要恢复grub.

-

把linux安装光盘的第一张放到光驱,然后重新启动机器,在BOIS中把系统用光驱来引导。

-

等安装界面出来后,按[F4]键,也就是linux rescue模式。

-

一系列键盘以及几项简单的配制,过后就[继续]了。。。这个过程,我不说了,比较简单。

-

然后会出现这样的提示符: sh#

-

我们就可以操作GRUB了.输入grub: sh#grub 会出现这样的提示符: grub> 我们就可以在这样的字符后面,输入:

grub>root (hdX,Y)

grub>setup (hd0)

如果成功会有一个successful...... 这里的X,如果是一个盘,就是0,如果你所安装的linux的根分区在第二个硬盘上,那X就是1了;Y,就是装有linux系统所在的根分区。 setup (hd0)就是把GRUB写到硬盘的MBR上。

用NTLoader来引导linux.

如果你在安装linux时没有选择安装grub,不必着急,现在我们来看看如何在安装linux后安装grub.并用windows的NTLoader来引导linux.

-

安装grub 我用的grub是Redhat8.0带的grub安装包: grub-0.92-7.rpm 安装: rpm -ivh grub-0.92-7.rpm 其他安装方式也一样,只要你安装上grub就行了.RH8缺省用的grub, 1,2步骤可以省了.

-

建立grub的环境 cp /usr/share/grub/i386-pc/* /boot/grub

-

生成grub的配置文件/boot/grub/menu.lst 注意了, 这里我的linux在/dev/hda4,所以menu.lst那些分区位置为(hd0,3), 你的可能不一样了,不能完全照着"画瓢"噢! 下面第3步install的中的分区位置也应该和你的系统一致.

-

安装grub至Linux分区boot 将grub的stage1安装到/dev/hda4的boot扇区(hd0,3). 过程如下: /sbin/grub (运行grub)

grub> **install (hd0,3)/boot/grub/stage1 d (hd0,3) (hd0,3)/boot/grub/stage2 p (hd0,3)/boot/grub/menu.lst **

(注意,上面"grub>"为grub的提示符,其后内容写在一行上.)

- 取得grub的boot信息 过程如下:

dd if=/dev/hda4 of=/grub.lnx bs=512 count=1

这样得到grub的引导信息,只要用NT Loader来加载它就行了.

-

将上面得到的grub.lnx弄到Windows的C盘根目录下 可以先把grub.lnx弄得软盘上,然后启动windows,拷贝到C:; 情况允许也可以直接在Linux下拷贝到C:了. 我的C盘(即设备/dev/hda1)为FAT32, 可以直接从Linux下弄过去了. 如下: mount -t vfat /dev/hda1 /mnt/c cp /grub.lnx /mnt/c umount /mnt/c

-

修改NT Loader的boot.ini 在其中加入一行: C:\grub.lnx="Redhat Linux - GRUB" 加入后boot.ini的内容如下:

[boot loader]

timeout=15

default=C:\boot.lnx

[operating systems]

multi(0)disk(0)rdisk(0)partition(1)\WINDOWS="Microsoft Windows XP Professional" /fastdetect

[VGA mode]" /

basevideo /sos

C:\grub.lnx="Redhat Linux - GRUB"

OK. 可以用NT Loader加载Linux了, 其实上面过程基本上和用NT Loader加载LILO一样.其基本思想就是用NT Loader来加载LILO或grub的引导区(grub.lnx), 其中的关键就是LILO或grub的引导区的获取

How did I enable Alcor Micro Smart Card reader in Virtualbox

SYSTEM:

Host:

Software: Windows 7 64bit.

Hardware: HP Elitebook 8460P Notebook PC

Virtualbox: 4.3.0

Guest:

Windows XP

Target:

Make the built-in Alcor Micro smart card reader work on the guest OS.

HOW:

- on Host,

C:\Program Files\Oracle\VirtualBox>VBoxManage list usbhost

Host USB Devices:

UUID: 9edb62ed-aaf9-403b-97e6-ab69bea836cc

VendorId: 0x03f0 (03F0)

ProductId: 0x3a1d (3A1D)

Revision: 0.0 (0000)

Port: 0

USB version/speed: 2/2

Address: {36fc9e60-c465-11cf-8056-444553540000}\0009

Current State: Captured

UUID: c2a753e9-662e-42f2-8090-c60d060b65e5

VendorId: 0x046d (046D)

ProductId: 0xc062 (C062)

Revision: 49.0 (4900)

Port: 0

USB version/speed: 2/2

Manufacturer: Logitech

Product: USB Laser Mouse

Address: {745a17a0-74d3-11d0-b6fe-00a0c90f57da}\0040

Current State: Busy

UUID: 86a32467-2968-4a07-abb0-23bf09b700e6

VendorId: 0x046d (046D)

ProductId: 0xc31d (C31D)

Revision: 102.1 (10201)

Port: 0

USB version/speed: 1/1

Manufacturer: Logitech

Product: USB Keyboard

Address: {36fc9e60-c465-11cf-8056-444553540000}\0040

Current State: Busy

UUID: 790e38b5-b41f-4c71-a3cd-12dc3111a174

VendorId: 0x0529 (0529)

ProductId: 0x0001 (0001)

Revision: 1.0 (0100)

Port: 0

USB version/speed: 1/1

Manufacturer: AKS

Product: Hardlock USB 1.12

Address: {36fc9e60-c465-11cf-8056-444553540000}\0047

Current State: Busy

UUID: 5ca23bce-a86c-4d46-b053-738eed5ae0f9

VendorId: 0x058f (058F)

ProductId: 0x9540 (9540)

Revision: 1.32 (0132)

Port: 0

USB version/speed: 1/1

Manufacturer: Generic

Product: EMV Smartcard Reader

Address: {50dd5230-ba8a-11d1-bf5d-0000f805f530}\0000

Current State: Busy

UUID: 528b875a-6518-484c-9fd9-287b47a6cab4

VendorId: 0x138a (138A)

ProductId: 0x003c (003C)

Revision: 0.134 (00134)

Port: 0

USB version/speed: 1/1

Address: {53d29ef7-377c-4d14-864b-eb3a85769359}\0000

Current State: Busy

UUID: ff4be7d3-6719-42da-87a2-d8523fe941cc

VendorId: 0x1bcf (1BCF)

ProductId: 0x2888 (2888)

Revision: 3.4 (0304)

Port: 0

USB version/speed: 2/2

Manufacturer: 6047B0021702A0117K8SY

Product: HP HD Webcam [Fixed]

Address: {36fc9e60-c465-11cf-8056-444553540000}\0008

Current State: Busy

The vendor ID and product ID can be verified by Device Manager, detailed information of Alcor Micro Smart Card Reader.

2. On Virtualbox, change setting of the guest. USB -> Add filters --> Choose device 0580:9540. Check the box and start the guest OS.

3. When the guest is started, it will indicate new hardware is found. Installing the driver automatically ends up with a failure.

4. Download the driver from HP's website manually.

Choose Alcor Micro Smart Card Reader Driver (International)

http://ftp.hp.com/pub/softpaq/sp63501-64000/sp63565.exe

5. Install and reboot, Done!

How to disable ads on Skype

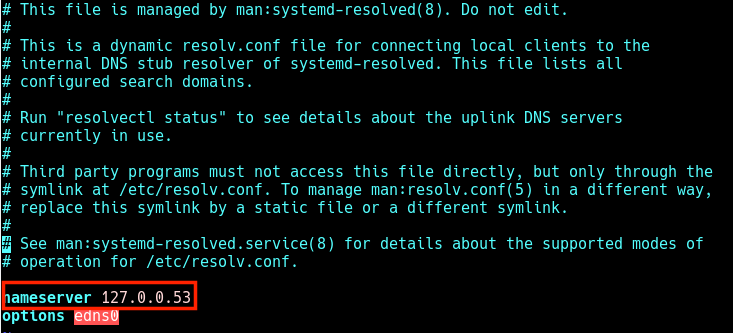

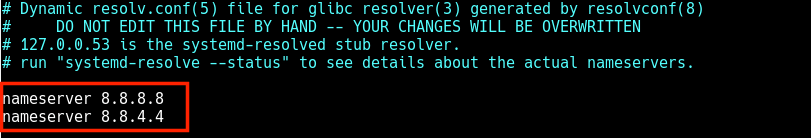

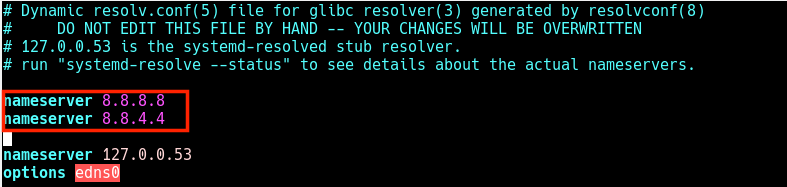

You need to add entries to your hosts file, typically located here:C:\Windows\System32\drivers\etc\hosts

These are hostnames you'll want to block, by adding them to the hosts file:

127.0.0.1 rad.msn.com

127.0.0.1 g.msn.com

127.0.0.1 live.rads.msn.com

127.0.0.1 ads1.msn.com

127.0.0.1 static.2mdn.net

127.0.0.1 ads2.msads.net

127.0.0.1 a.ads2.msads.net

127.0.0.1 b.ads2.msads.net

127.0.0.1 ad.doubleclick.net

127.0.0.1 ac3.msn.com

127.0.0.1 ec.atdmt.com

127.0.0.1 msntest.serving-sys.com

127.0.0.1 sO.2mdn.net

127.0.0.1 aka-cdn-ns.adtech.de

127.0.0.1 secure.flashtalking.com

127.0.0.1 cdn.atdmt.com

Source of hostnames here: wikiHow, and Skype forum. Just a warning, but Microsoft Security Essentials (MSE) may think your hosts file was hijacked, so if you have issues make sure to allow the changes through MSE.

Disk Usage Tool

Scanner: http://www.steffengerlach.de/freeware/

Open excel in new window instance (very useful when you have dual monitors)

Excel can be boring, especial when you have two monitors and want to compare two different files side by side. That's because all files are opened in the same window by default. If you want to open a new instance for each excel sheet, here is how-to:

Open regedit, browse to HKEY_CLASSES_ROOT\Excel.Sheet.8\shell\Open

Delete the ddeexec key, (or just rename it)

Then click on the "command" key and replace the /dde on the end of the (Default) and command strings with "%1"

Quotes around %1 are important.

After the change, the lines should look like this:

(Default) REG_SZ "C:\Program Files (x86)\Microsoft Office\Office14\EXCEL.EXE" "%1"

command REG_MULTI_SZ xb'BV5!!!!!!!!!MKKSkEXCELFiles>VijqBof(Y8'w!FId1gLQ "%1"

Then do the same for Excel.Sheet.12

Now Both .xls and .xlsx should open in new windows with no errors.

I have tested this on my PC successfully. Hopefully it will also be useful for you!

File type association commands on Windows

Checking whether the association is already configured:

C:\MyRuby>assoc .rb

File association not found for extension .rb

Assuming that the association is not already configured, take the following steps to complete the configuration:

C:\MyRuby>assoc .rb=rbFile

Check to see if the file type rbfile already exists:

C:\MyRuby>ftype rbfile

File type 'rbfile' not found or no open command associated with it.

Assuming it does not already exist (be sure to substitute the path to your Ruby installation in the following command):

C:\MyRuby>ftype rbfile="D:\Ruby\bin\ruby.exe" "%1" %*

Verify the setting:

C:\MyRuby>ftype rbfile

rbfile="D:\ruby\bin\ruby.exe" "%1" %*

Add .rb to the PATHEXT environment variable as follows:

C:\MyRuby>set PATHEXT=.rb;%PATHEXT%

Once the above settings are configured simply run the program by typing the filename at the command prompt (the .rb filename extension is not required), e.g."

C:\MyRuby> hello

Hello Ruby

The above steps can be placed in your Autoexec.bat file if you would like this association made every time you reboot your system.

Change login screen on Windows 7

无需任何软件,简单修改Win7开机登陆界面背景图片,让您的电脑更为个性。

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Authentication\LogonUI\Background]

右侧新建一个双字节值“OEMBackground”(OEM版本的Win7已经有这个键值)→右击该双字节值→“修改”将键值修改为“0”。

Cloud

Aws

date: 2020-12-08 08:43:50.040000 author(s): Nazreen Mohamad

How to run macOS using Amazon EC2 Mac instances

AWS recently announced that you can now run Mac instances via EC2. The Mac instances are listed under a new instance family called ‘m1’. The two macOS versions listed are Catalina and High Sierra. Big Sur is not yet supported.

AWS Console: Make sure you are in a supported region

Currently macOS on EC2 is only available in these regions: US East (N. Virginia), US East (Ohio), US West (Oregon), EU (Ireland), and Asia-Pacific (Singapore). Make sure you’re in one of these regions before you carry on with the next steps.

AWS Console: Allocate a dedicated host

Firstly, because the macOS EC2 instance will be running on actual Mac Minis and not on a virtual machines, you require a dedicated host.

- Go to the EC2 Console.

- Under ‘Instances’, click on ‘Dedicated Hosts’.

- Click on the ‘Allocate Dedicated Hosts’ button (it’s the orange one).

- For the Dedicated Host settings page, input/select the following:

name — whatever you’d like instance family — mac1 support multiple instance types — disable this (it is enabled by default) Instance type — mac1.metal availability zone — any, Instance auto-placement — enabled Host recovery — disable this.

Click ‘Allocate’

AWS Console: Launch the instance

- Go to the EC2 console.

- Click ‘Launch Instance’

- Select ‘macOS Catalina 10.15.7’

- Click ‘Review and Launch’

- Use an existing key or create a new one. You’ll need the key for later.

If that failed, go to ‘Edit Instance Details’ and select manually the host that you created. Somehow the ‘auto-placement’ did not work for me.

Connect via VNC

So how can you connect to your macOS remotely? For that, you can use the VNC protocol.

- AWS Console: Update the security group your mac instance is in to allow port 5900 but make sure to only allow your own IP address as it’s insecure.

- SSH into the instance using the key from before. command: ssh -i

ec2-user@ - In the EC2 Mac instance: Start up the VNC server on your mac. Refer to the commands here, but note that the change password line does not work. For that, use the below:

echo “passwordhere” | perl -we ‘BEGIN { @k = unpack “C*”, pack “H*”, “1734516E8BA8C5E2FF1C39567390ADCA”}; $_ = <>; chomp; s/^(.{8})./$1/; @p = unpack “C”, $; foreach (@k) { printf “%02X”, $ ^ (shift @p || 0) }; print “\n”’ | sudo tee /Library/Preferences/com.apple.VNCSettings.txt

Make sure to replace passwordhere with the actual password.

4. Download your VNC client on your local machine.

5. On the EC2 console, copy the IP address of our macOS ec2 instance.

6. Connect to your macOS ec2 instance via the VNC client. Use the obtained IP address from step 5 and the password you’ve set from step 2.

You should now be able to view your mac’s login screen:

Logging into your macOS instance

Ok so you can connect via VNC, but where’s the password for ec2-user?

For this, go back to your ssh session, and run the following to create a password for ec2-user:

sudo passwd ec2-user

You should be able to use that password to login in your VNC session to your Mac instance.

Let me know if that was useful! And if you have requests for other tutorials.

Potential error messages

Below is a list of possible error messages that you may encounter. These can all happen when you try to allocate a dedicated host.

The requested configuration is currently not supported. Please check the documentation for supported configurations.

I am currently in touch with someone in AWS in order to clarify on the correct configurations. I will update this story once I receive the clarification. UPDATE: the AWS contact has confirmed that this error pops up when there is a capacity issue. That means the selected AZ does not yet have a Mac dedicated host in it or it has run out.

Other errors:

“m1 instance family is not supported”

This is when you leave ‘Support multiple instance types’ enabled.

Insufficient capacity.

This is when you disable ‘support multiple instance types’ and select exactly ‘mac1.metal’. I am guessing that AWS has run out of Mac Minis to support any more Mac instances. I will confirm this and update this post.

Instance type ‘mac1.metal’ does not support host recovery.

This is when you enable ‘host recovery’. Disable it.

HTTPS setup in Amazon EC2

This answer is focused to someone that buy a domain in another site (as GoDaddy) and want to use the Amazon free certificate with Certificate Manager

This answer uses Amazon Classic Load Balancer (paid)see the pricing before using it

Step 1 - Request a certificate with Certificate Manager

Go to Certificate Manager > Request Certificate > Request a public certificate

On Domain name you will add myprojectdomainname.com and

*.myprojectdomainname.com and go on Next

Chose Email validation and Confirm and Request

Open the email that you have received (on the email account that you have buyed the domain) and aprove the request

After this, check if the validation status of myprojectdomainname.com and

*.myprojectdomainname.com is sucess, if is sucess you can continue to Step 2

Step 2 - Create a Security Group to a Load Balancer

On EC2 go to Security Groups > and Create a Security Group and add the http and https inbound

Step 3 - Create the Load Balancer

EC2 > Load Balancer > Create Load Balancer > Classic Load Balancer (Third option)

Create LB inside - the vpc of your project On Load Balancer Protocol add Http

and Https

Next > Select exiting security group

Choose the security group that you have create in the previous step

Next > Choose certificate from ACM

Select the certificate of the step 1

Next >

on Health check i've used the ping path / (one slash instead of /index.html)

Step 4 - Associate your instance with the security group of load balancer

EC2 > Instances > click on your project > Actions > Networking > Change Security Groups

Add the Security Group of your Load Balancer

Step 5

EC2 > Load Balancer > Click on the load balancer that you have created > copy

the DNS Name (A Record), it will be something like myproject-2021611191.us- east-1.elb.amazonaws.com

Go to Route 53 > Routes Zones > click on the domain name > Go to Records Sets

(If you are don't have your domain here, create a hosted zone with Domain Name: myprojectdomainname.com and Type: Public Hosted Zone)

Check if you have a record type A (probably not), create/edit record set with name empty, type A, alias Yes and Target the dns that you have copied

Create also a new Record Set of type A , name *.myprojectdomainname.com,

alias Yes and Target your domain (myprojectdomainname.com). This will make

possible access your site with www.myprojectdomainname.com and

subsite.myprojectdomainname.com. Note: You will need to configure your reverse

proxy (Nginx/Apache) to do so.

On NS copy the 4 Name Servers values to use on the next Step, it will be something like:

ns-362.awsdns-45.com ns-1558.awsdns-02.co.uk ns-737.awsdns-28.net ns-1522.awsdns-62.org

Go to EC2 > Instances > And copy the IPv4 Public IP too

Step 6

On the domain register site that you have buyed the domain (in my case GoDaddy)

Change the routing to http : <Your IPv4 Public IP Number> and select Forward

with masking

Change the Name Servers (NS) to the 4 NS that you have copied, this can take 48 hours to make effect

Network

IP header sample

距离矢量路由协议(distance vector) VS 链路状态路由协议(link-state

一、PK第一番

距离矢量:

运行距离矢量路由协议的路由器,会将所有它知道的路由信息与邻居共享,但是只与直连邻居共享!

链路状态:

运行链路状态路由协议的路由器,只将它所直连的链路状态与邻居共享,这个邻居是指一个域内(domain),或一个区域内(area)的所有路由器!

二、PK第二番

所有距离矢量路由协议均使用Bellman-Ford(Ford-Fulkerson)算法,容易产生路由环路(loop)和计数到无穷大(counting to infinity)的问题。因此它们必须结合一些防环机制:

split-horizon

route poisoning

poison reverse

hold-down timer

trigger updates

同时由于每台路由器都必须在将从邻居学到的路由转发给其它路由器之前,运行路由算法,所以网络的规模越大,其收敛速度越慢。

链路状态路由协议均使用了强健的SPF算法,如OSPF的dijkstra,不易产生路由环路,或是一些错误的路由信息。路由器在转发链路状态包时(描述链路状态、拓扑变化的包),没必要首先进行路由运算,再给邻居进行发送,从而加快了网络的收敛速度。

三、PK第三番

距离矢量路由协议,更新的是“路由条目”!一条重要的链路如果发生变化,意味着需通告多条涉及到的路由条目!

链路状态路由协议,更新的是“拓扑”!每台路由器上都有完全相同的拓扑,他们各自分别进行SPF算法,计算出路由条目!一条重要链路的变化,不必再发送所有被波及的路由条目,只需发送一条链路通告,告知其它路由器本链路发生故障即可。其它路由器会根据链路状态,改变自已的拓扑数据库,重新计算路由条目。

四、PK第四番

距离矢量路由协议发送周期性更新、完整路由表更新(periodic & full)

而链路状态路由协议更新是非周期性的(nonperiodic),部分的(partial)

http://sxzx.360doc.com/content/081231/18/36491_2235770.html

Internet 传输层协议

本章介绍了 Internet 传输层的两个重要协议 TCP 和 UDP ,包括这两种协议的报文格式和工作原理。特别地,本章详细介绍了 TCP 的连接建立与关闭,以及连接建立与关闭过程的状态转换。

http://www.360doc.com/content/07/0805/16/36481_654974.shtml

常见网络协议头结构图

IP:

TCP:

UDP:

传输层:

对于UDP协议来说,整个包的最大长度为65535,其中包头长度是65535-20=65515;

对于TCP协议来说,整个包的最大长度是由最大传输大小(MSS,Maxitum Segment Size)决定,MSS就是TCP数据包每次能够传

输的最大数据分段。为了达到最佳的传输效能TCP协议在建立连接的时候通常要协商双方的MSS值,这个值TCP协议在实现的时候往往用MTU值代替(需

要减去IP数据包包头的大小20Bytes和TCP数据段的包头20Bytes)所以往往MSS为1460。通讯双方会根据双方提供的MSS值得最小值

确定为这次连接的最大MSS值。

IP层:

对于IP协议来说,IP包的大小由MTU决定(IP数据包长度就是MTU-28(包头长度)。 MTU值越大,封包就越大,理论上可增加传送速率,但

MTU值又不能设得太大,因为封包太大,传送时出现错误的机会大增。一般默认的设置,PPPoE连接的最高MTU值是1492, 而以太网

(Ethernet)的最高MTU值则是1500,而在Internet上,默认的MTU大小是576字节

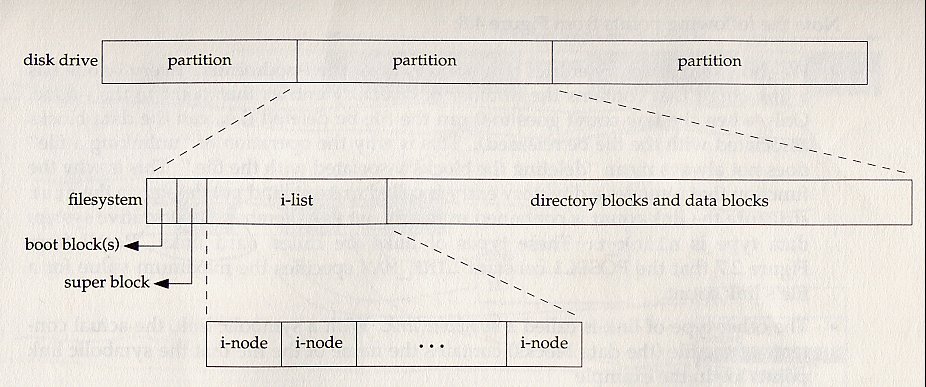

计算机网络教程

Please find attachments here: https://sites.google.com/site/xiangyangsite/home/technical-tips/network/networktutorial

Web

How to setup namecheap with hosted github static pages

namecheap settings for github pages:

A record @ 185.199.108.153 automatic

A record @ 185.199.109.153 automatic

A record @ 185.199.110.153 automatic

A record @ 185.199.111.153 automatic

cname record www hex0cter.github.io. 30 min

How to setup namecheap towards s3 bucket

| CNAME Record | @ | danielhan.dev.s3-website.eu-north-1.amazonaws.com. |

| CNAME Record | www | danielhan.dev.s3-website.eu-north-1.amazonaws.com. |

Note SSL does not come out of box.

Hardware

Difference between MultiCore and MultiProcessor

A CPU, or Central Processing Unit, is what is typically referred to as a processor. A processor contains many discrete parts within it, such as one or more memory caches for instructions and data, instruction decoders, and various types of execution units for performing arithmetic or logical operations.

A multiprocessor system contains more than one such CPU, allowing them to work in parallel. This is called SMP, or Simultaneous Multiprocessing.

A multicore CPU has multiple execution cores one one CPU. Now, this can mean different things depending on the exact architecture, but it basically means that a certain subset of the CPU's components is duplicated, so that multiple "cores" can work in parallel on separate operations. This is called CMP, Chip-level Multiprocessing.

For example, a multicore processor may have a separate L1 cache and execution unit for each core, while it has a shared L2 cache for the entire processor. That means that while the processor has one big pool of slower cache, it has separate fast memory and artithmetic/logic units for each of several cores. This would allow each core to perform operations at the same time as the others.

There is an even further division, called SMT, Simultaneous Multithreading. This is where an even smaller subset of a processor's or core's componenets is duplicated. For example, an SMT core might have duplicate thread scheduling resources, so that the core looks like two separate "processors" to the operating system, even though it only has one set of execution units. One common implementation of this is Intel's Hyperthreading.

Thus, you could have a multiprocessor, multicore, multithreaded system. Something like two quad-core, hyperthreaded processors would give you 2x4x2 = 16 logical processors from the point of view of the operating system.

Different workloads benefit from different setups. A single threaded workload being done on a mostly single-purpose machine benefits from a very fast, single-core/cpu system. Workloads that benefit from highly-parallelized systems such as SMP/CMP/SMT setups include those that have lots of small parts that can be worked on simultaneously, or systems that are used for lots of things at once, such as a desktop being used to surf the web, play a Flash game, and watch a video all at once. In general, hardware these days is trending more and more toward highly parallel architectures, as most single CPU/core raw speeds are "fast enough" for common workloads across most models.

Life

- Gmail for nokia e72

- How to combine ovpn files

- How to banish embarrassing or annoying autocomplete suggestions from your browser

- Image to text (ocr) for swedish

- Exchange for gmail on iphone

- Rotate an image automatically on linux

- Gmail setup instructions on nokia phone

- Retreive saved wifi password on symbian

- Help:cheatsheet

- Ocr translate letters to your own language

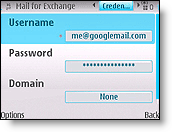

Gmail for Nokia E72